From Edge Impulse

About Edge Impulse

"Edge Impulse is the leading development platform for machine learning on edge devices, free for developers and trusted by enterprises." You can find the Edge Impulse training platform at https://studio.edgeimpulse.com/.

Preliminaries

In this section of our documentation we describe how to use the Edge Impulse model training platform to train machine learning models for vision tasks and deploy them using the Nx AI cloud. To follow along, we assume that you have access to the following:

An edge device with the Nx AI manager installed.

A camera that can be used as an input source in Nx Meta as well as in stand-alone mode.

An Edge Impulse account. Sign up for a free Edge Impulse account at https://studio.edgeimpulse.com/.

Due to policy changes, Edge Impulse has removed the built-in YOLOv5 block. Your existing model will still work, but to train a new model you'll need to re-upload the YOLOv5 block to the Edge Impulse platform. This takes only 5 to 10 minutes, and you can follow the instructions here.

Let us know if you need any assistance!

Once you have all of the above set up, you can proceed to train your own model using Edge Impulse and deploy it using the Nx AI Manager.

Quick overview

We will demonstrate, step by step, how to train and deploy your own model, and how to retrain it once it has been deployed in the field. We will cover the following steps:

Model training using the Edge Impulse platform. Note that we will not provide an elaborate walkthrough of the capabilities of the Edge Impulse platform; these are covered in the Edge Impulse docs: https://docs.edgeimpulse.com/docs/.

Coupling your Edge Impulse model with the Nx AI cloud. We will show how to sync your Edge Impulse model with your model catalog.

Deploying (and testing) your model on your edge device. This section details how to deploy your Edge Impulse model to your edge device using the Nx AI cloud.

Retraining your model. This step is optional, but cool. Once you have a model set up, you can collect new training examples in the field and use them to retrain the model. You can then iterate (go back to step 1) and keep improving.

1. Model training using the Edge Impulse platform

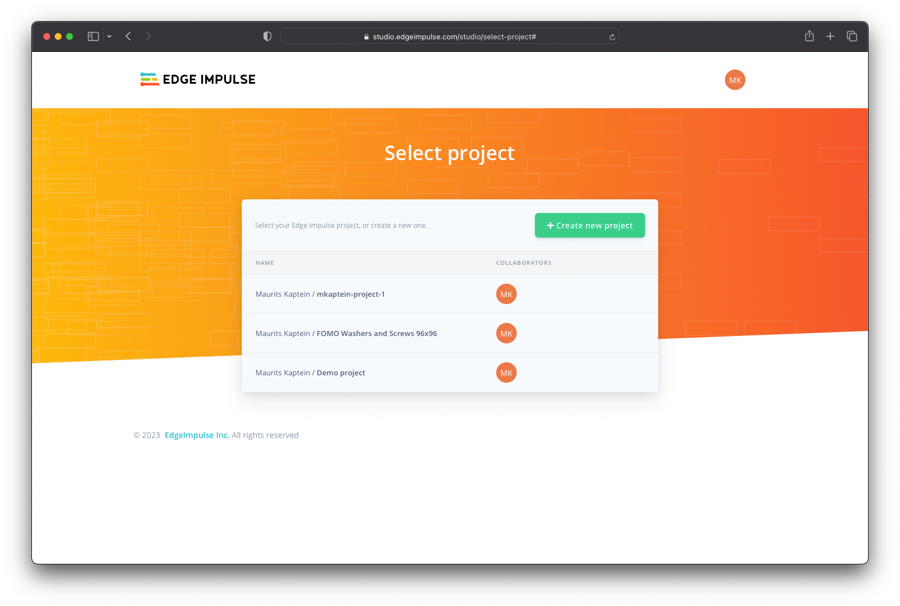

We start the development of a novel edge AI solution by creating a new project on the Edge Impulse platform:

The Edge Impulse platform is very intuitive and allows you to upload and annotate training examples and to train object detection models. We will focus on Edge Impulse's YOLOv5 model; a quick getting-started guide to object detection on Edge Impulse is available here: https://docs.edgeimpulse.com/docs/tutorials/detect-objects-using-fomo.

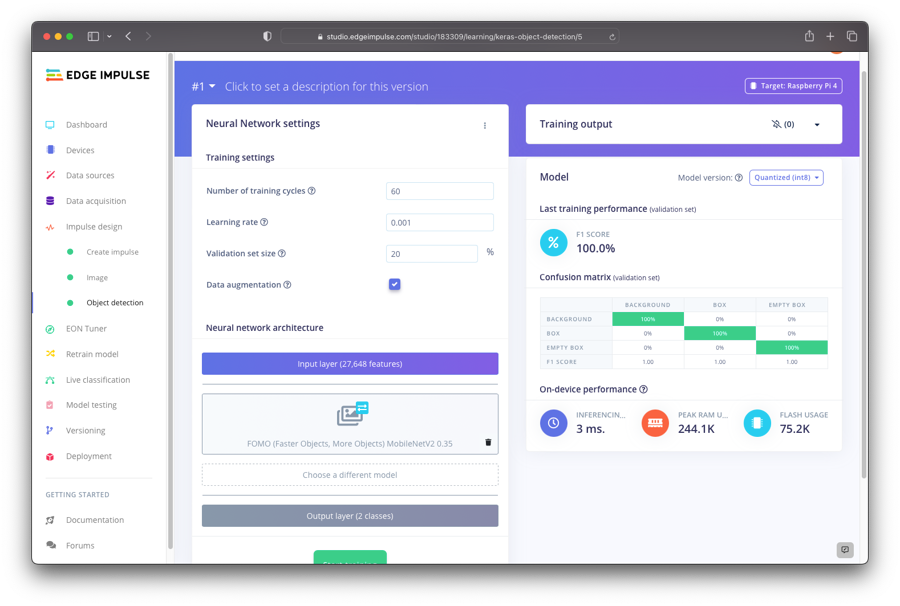

The important part for this tutorial is to train an object detection model and to select one of the supported model architectures. Work through the data acquisition and impulse creation steps in the Edge Impulse platform to get to the object detection model:

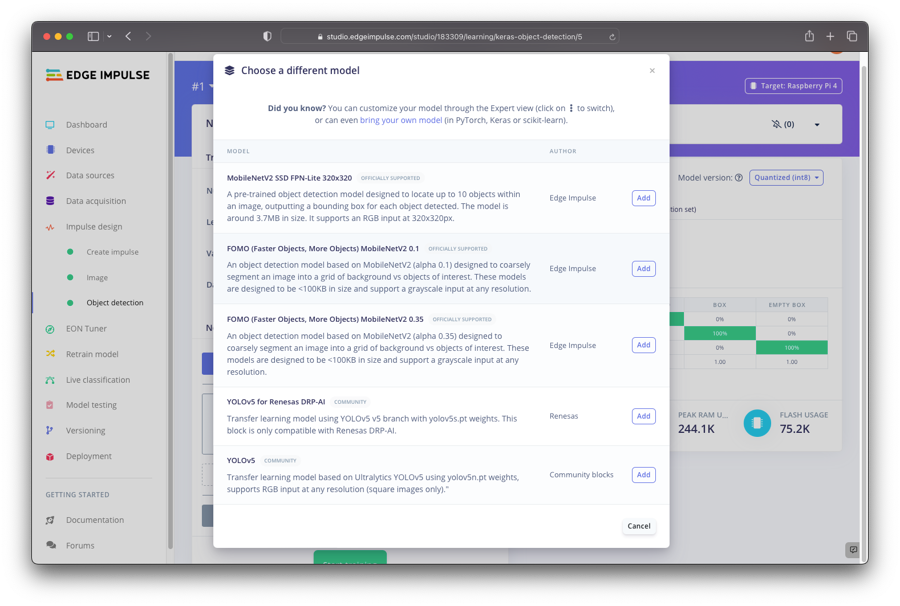

Make sure to select either FOMO MobileNetV2 (0.1 or 0.35) or YOLOv5. After you have clicked "Start training" and training has finished, you are done (for now) on the Edge Impulse platform.

At this point, only FOMO MobileNetV2 and YOLOv5 models can be imported from Edge Impulse. We will be adding support for more Edge Impulse models shortly.

2. Coupling your Edge Impulse model with Nx

After training your model, you can leave the Edge Impulse platform (but do keep it open in a tab) and move to https://admin.sclbl.nxvms.com/. After logging in to the Nx AI cloud, you will arrive at your dashboard showing your current models and devices (both might be 0 when you are just getting started). On that page, click the "Add a model" button.

You may need to select an organisation first; you will then arrive at the model upload page, where you can select the "Edge Impulse" type.

At this point you can use your Edge Impulse API key and project ID to import your trained model directly from Edge Impulse.

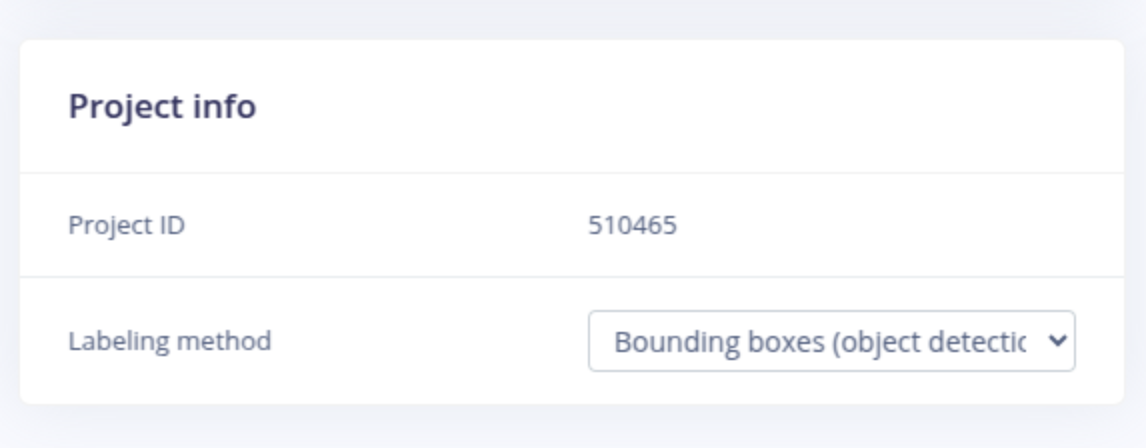

Your project ID is shown in a separate box on the project info page, and also appears as the last item in the URL:

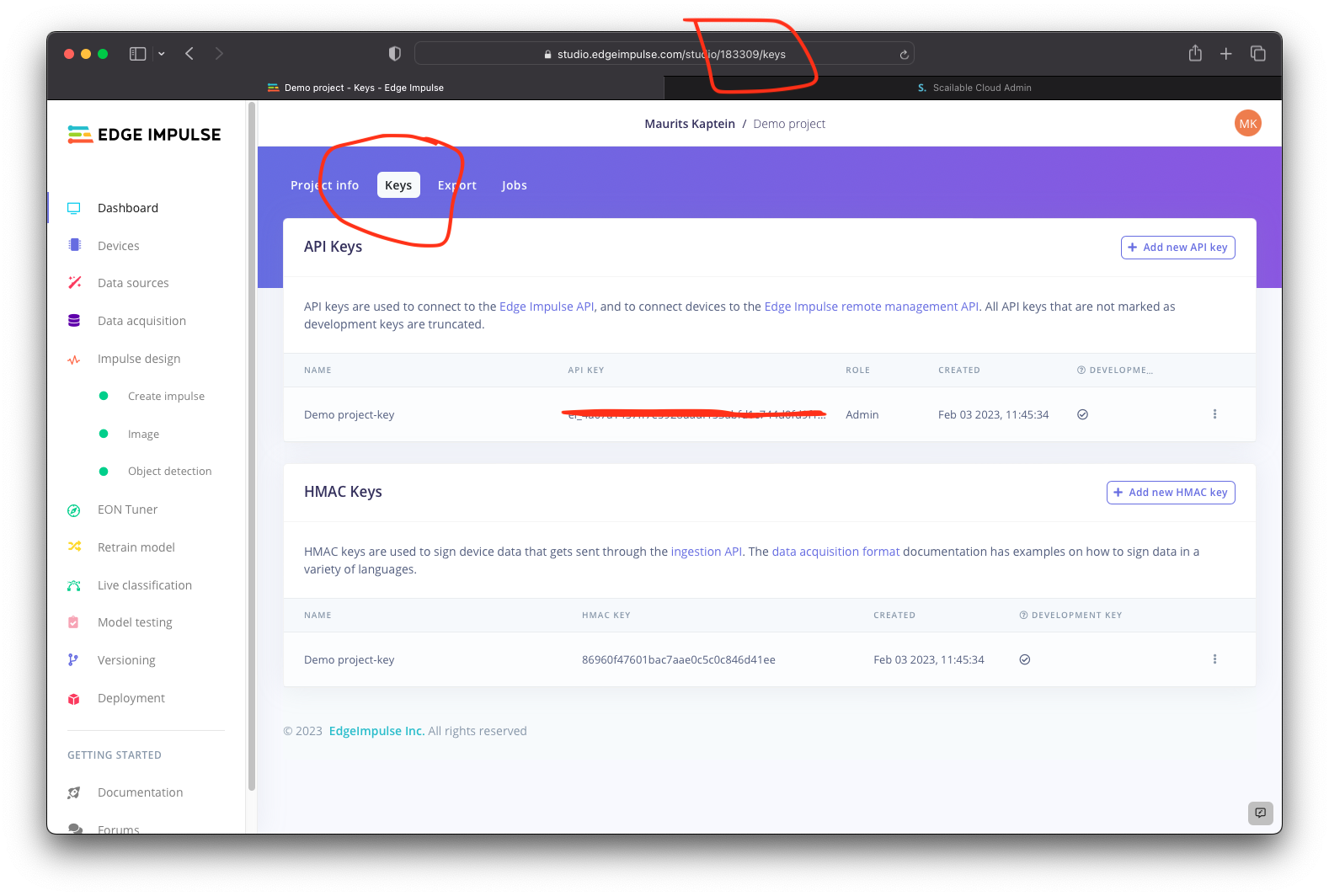
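If you copy the project URL rather than the ID itself, a small helper can extract the ID. The Python sketch below assumes Studio project URLs have the form `https://studio.edgeimpulse.com/studio/<project ID>`, optionally followed by a subpage path; `project_id_from_url` is a hypothetical helper, not part of Edge Impulse or Nx.

```python
import re
from urllib.parse import urlparse

# Assumed URL shape: https://studio.edgeimpulse.com/studio/<project ID>[/...]
_STUDIO_PATH = re.compile(r"^/studio/(\d+)(?:/|$)")


def project_id_from_url(url: str) -> int:
    """Extract the numeric Edge Impulse project ID from a Studio URL (hypothetical helper)."""
    path = urlparse(url.strip()).path
    match = _STUDIO_PATH.match(path)
    if match is None:
        # The URL does not follow the assumed /studio/<id> pattern.
        raise ValueError(f"no project ID found in {url!r}")
    return int(match.group(1))
```

Matching the path segment right after `/studio/`, instead of literally taking the last item, also works when the copied URL points at a subpage of the project.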

Your API key can be found on your Edge Impulse project dashboard:

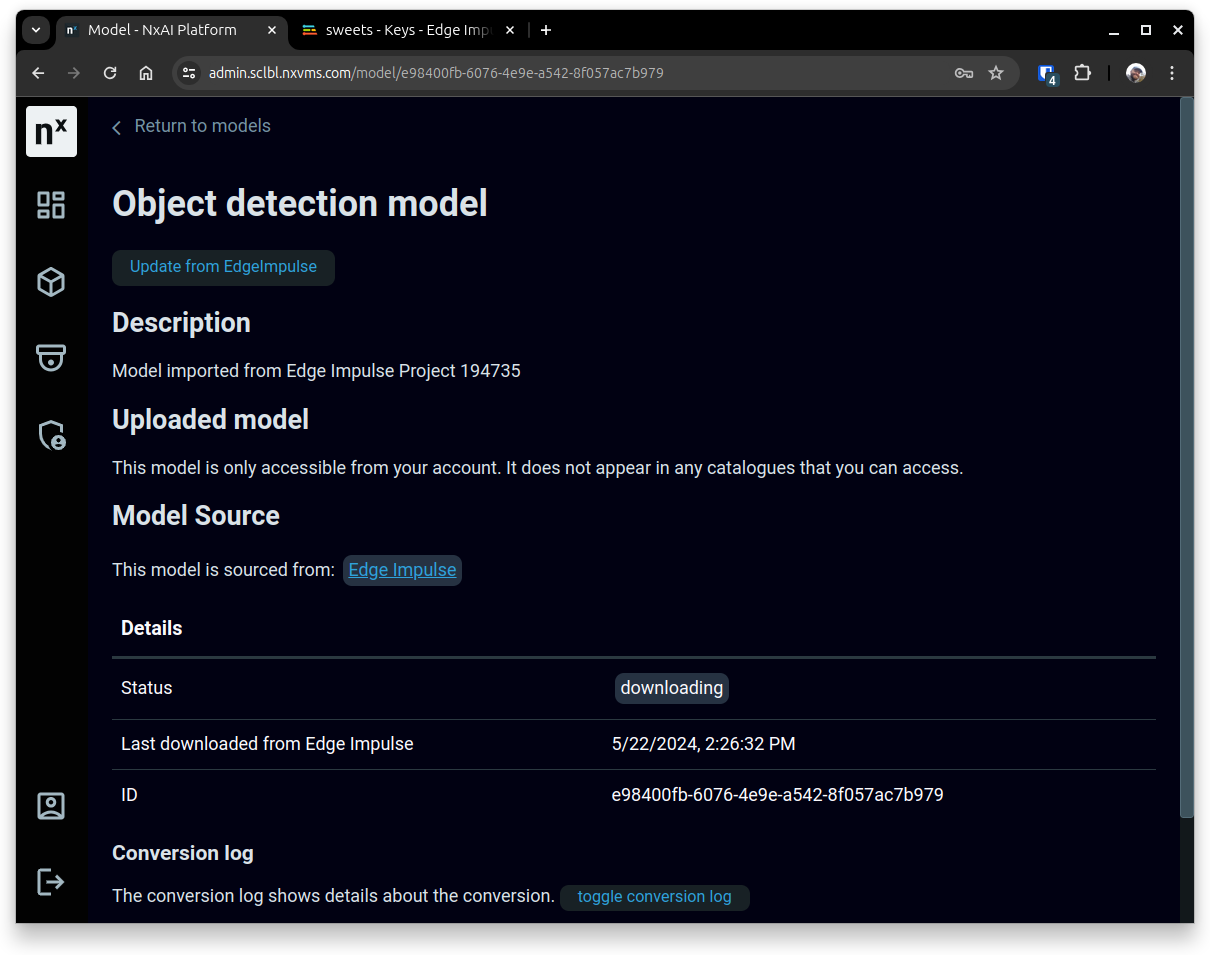
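To catch a mistyped key or ID before linking, you can query Edge Impulse directly. This is a minimal Python sketch, assuming the Edge Impulse Studio REST API's project-information endpoint (`GET https://studio.edgeimpulse.com/v1/api/<project ID>`), authentication via an `x-api-key` header, and a JSON response with a `success` field; check the Edge Impulse API reference for the authoritative details. The helper names are hypothetical and not part of the Nx AI cloud.

```python
import json
import urllib.error
import urllib.request

# Assumed base URL of the Edge Impulse Studio REST API.
API_BASE = "https://studio.edgeimpulse.com/v1/api"


def build_project_request(project_id: int, api_key: str) -> urllib.request.Request:
    """Build an authenticated GET request for the (assumed) project-info endpoint."""
    return urllib.request.Request(
        f"{API_BASE}/{project_id}",
        headers={"x-api-key": api_key, "Accept": "application/json"},
        method="GET",
    )


def check_credentials(project_id: int, api_key: str, timeout: float = 10.0) -> bool:
    """Return True if Edge Impulse accepts this API key for this project."""
    request = build_project_request(project_id, api_key)
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            body = json.load(response)
    except urllib.error.HTTPError:
        # e.g. 401/403 for a wrong key, 404 for an unknown project ID.
        return False
    # Network failures (urllib.error.URLError) are deliberately not caught here.
    return bool(body.get("success"))
```

For example, `check_credentials(123456, "<your API key>")` returns `True` only if the key is accepted for that project. The request builder is kept separate so it can be inspected without network access.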

After entering the API key and project ID, click the "Link model" button, and your Edge Impulse model will be imported into your Nx AI library:

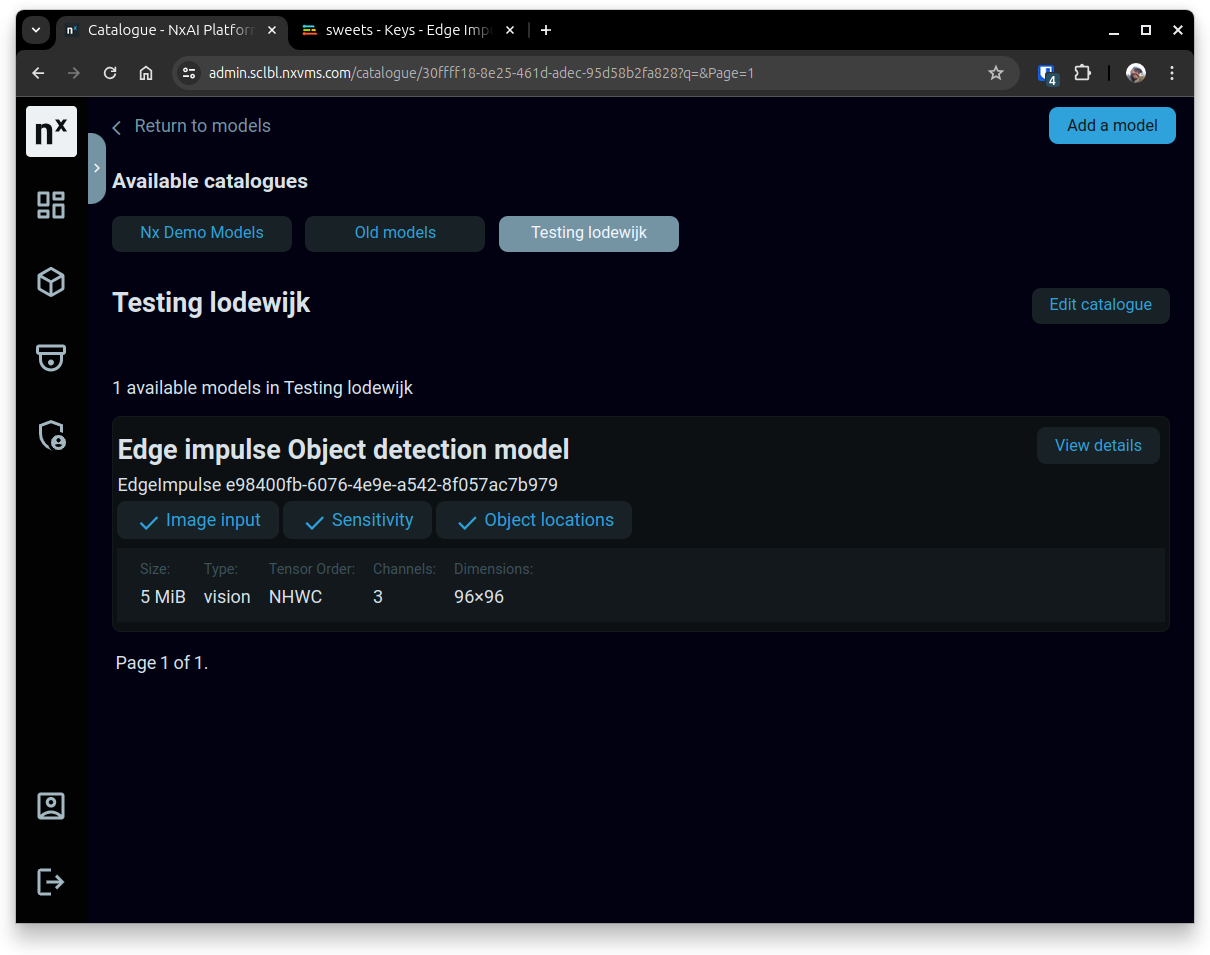

You can change the model name and documentation as usual, but after the import the model is immediately available for deployment. Once you click "Return to models", you will see the model at the top of your model list.

You are now ready to deploy your model to your selected edge device.

3. Deploying (and testing) your model on your edge device

In Nx Meta, connect to your system and open the plugin page.

Click "Manage device" and select the model you created.

Then click "Add to pipeline"; the model will be selected and you will return to the plugin.

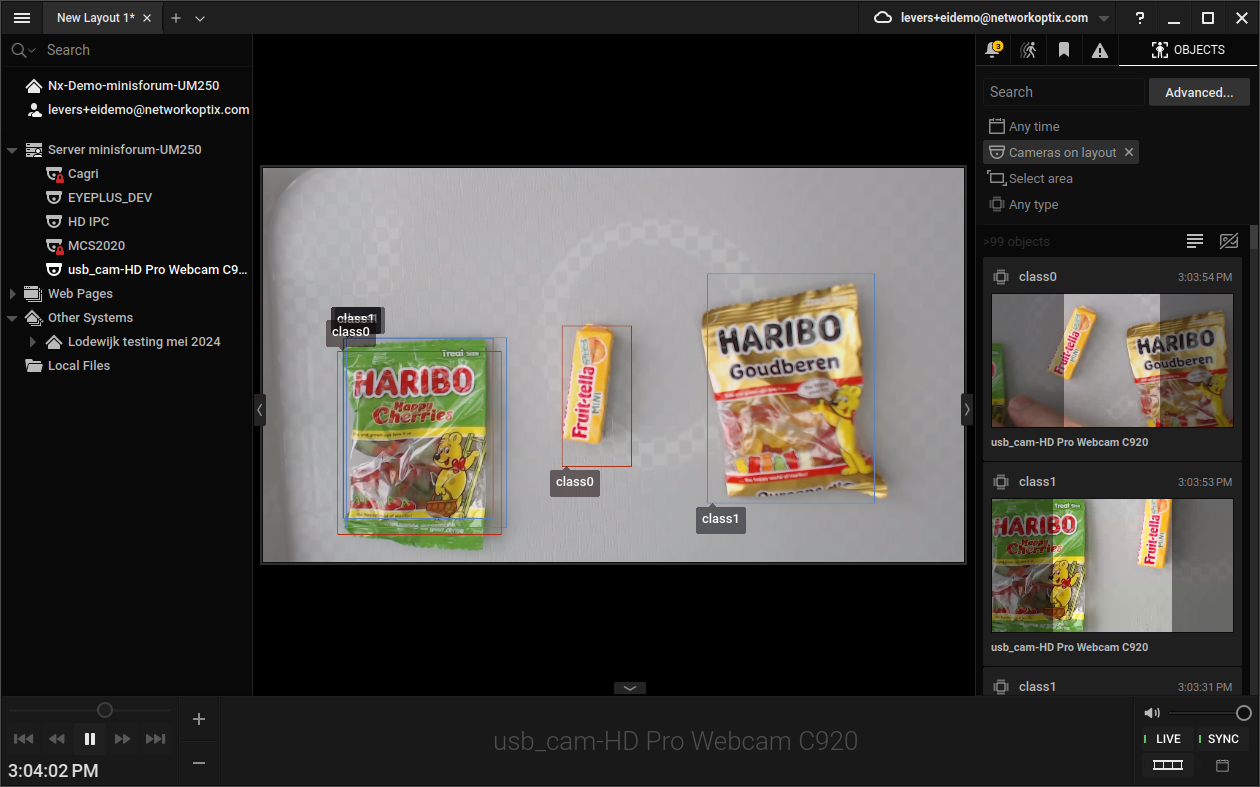

When you activate the object tab, the video feed should show detection boxes.

That's it really; you have just trained and deployed a pretty nifty AI model to your edge device.

4. Retraining your model

Steps 1 to 3 got you started, but there are a few nice tricks you can use to improve your solution over time. In particular, you can configure the on-device AI Manager to capture new training images when needed.

Set up an image-upload postprocessor using the integration SDK. You can configure the postprocessor to send images every N seconds, or whenever the result falls below a certain threshold value P.

Let the system run with the postprocessor for a while.

At this point you can navigate back to your Edge Impulse project, label the uploaded images, retrain the model, and then redeploy it.
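The upload rule of the postprocessor described above (send an image every N seconds, or when the result falls below a threshold P) can be sketched as a small decision function. This is a hypothetical Python illustration of the logic only: it does not use the Nx integration SDK, whose postprocessor API is not shown here, and the class and parameter names are our own. "Below P" is interpreted here as any detection scoring below `min_confidence`.

```python
import time
from dataclasses import dataclass, field
from typing import Optional, Sequence


@dataclass
class UploadTrigger:
    """Hypothetical rule deciding which frames to send back for labelling."""

    interval_s: float = 60.0      # periodic upload every N seconds
    min_confidence: float = 0.5   # threshold P for "uncertain" detections
    _last_upload: Optional[float] = field(default=None, init=False, repr=False)

    def should_upload(self, scores: Sequence[float], now: Optional[float] = None) -> bool:
        """Return True if the frame with these detection scores should be uploaded."""
        now = time.monotonic() if now is None else now
        # Periodic trigger: first frame ever, or N seconds since the last upload.
        periodic = self._last_upload is None or now - self._last_upload >= self.interval_s
        # Confidence trigger: at least one detection scored below the threshold.
        uncertain = any(score < self.min_confidence for score in scores)
        if periodic or uncertain:
            self._last_upload = now
            return True
        return False
```

Each upload, whichever trigger caused it, resets the periodic timer. Frames with no detections only count toward the periodic trigger, and since every uncertain frame is uploaded, a real deployment might also want a cap on the upload rate.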

Wrap up

The above covers the basics of training a model with Edge Impulse and deploying it with Nx. Very cool stuff, and in this article we have only scratched the surface of the potential applications.
