External Postprocessing

This page describes how to implement an external postprocessor that integrates with the Nx AI Manager.

It is sometimes desirable to add custom or proprietary processing to the inference pipeline. To support this, a custom application can be added which receives information from the Nx AI Manager and returns that information, optionally altered.

Examples are provided showing how to create these applications in both C and Python. However, these applications can be written in any programming language, as long as the device can execute the program, send it messages over a Unix socket, and receive a response.
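As a rough illustration of the request and response exchange over a Unix socket, the sketch below accepts one connection, reads one message, and writes one reply. The 4-byte little-endian length prefix and the helper names (`recv_message`, `send_message`, `serve_once`) are assumptions made for this example, not the documented Nx AI Manager protocol; the provided C and Python examples remain the reference for the actual framing.

```python
import os
import socket
import struct

# ASSUMPTION: each message is preceded by a 4-byte little-endian length.
HEADER = struct.Struct("<I")


def recv_exact(conn, n):
    """Read exactly n bytes, or raise if the peer closes early."""
    chunks = []
    while n > 0:
        chunk = conn.recv(n)
        if not chunk:
            raise ConnectionError("socket closed before the full message arrived")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_message(conn):
    """Read one length-prefixed message from the connection."""
    (length,) = HEADER.unpack(recv_exact(conn, HEADER.size))
    return recv_exact(conn, length)


def send_message(conn, payload):
    """Write one length-prefixed message to the connection."""
    conn.sendall(HEADER.pack(len(payload)) + payload)


def serve_once(socket_path, handler):
    """Accept one connection, pass the request to handler, send back its reply."""
    if os.path.exists(socket_path):
        os.unlink(socket_path)  # remove a stale socket file from an earlier run
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as server:
        server.bind(socket_path)
        server.listen(1)
        conn, _ = server.accept()
        with conn:
            send_message(conn, handler(recv_message(conn)))
```

A handler that returns its input unchanged, for example `serve_once("/tmp/example-postprocessor.sock", lambda buf: buf)` with a hypothetical socket path, would act as a pass-through postprocessor under these assumptions.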

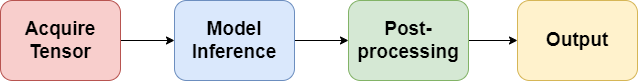

A high-level overview of the inference pipeline is as follows:

The postprocessor can therefore receive the inference results from the model, optionally alter these results, and return them. The results returned by the postprocessor are then sent to the Network Optix platform, where they can be used to generate bounding boxes or events.

Through the settings, instructions can be provided to the Nx AI Manager on how to start the application. The Nx AI Manager will automatically start the applications when they are needed, and terminate them once execution is finished.

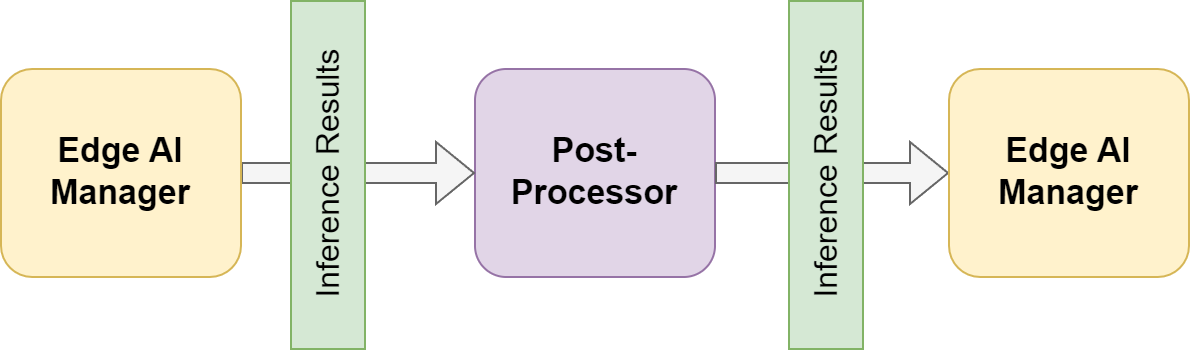

External Postprocessor

A postprocessor receives the inference results as a MessagePack-encoded buffer. This message is equivalent to what will be sent to the output. The postprocessor can alter this message and return it. The altered message will then be sent to the Network Optix platform. The returned message should have the same structure as the input message; otherwise, the Network Optix platform might be unable to parse it. Examples are provided to show how this structure can be parsed, altered, and written.
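The parse, alter, and write cycle described above might look roughly like the following sketch. It assumes the third-party `msgpack` package is installed, and the key name `BBoxes_xyxy` and its layout (a mapping from class name to a list of coordinates) are assumptions for illustration only; inspect an actual message or the provided examples for the real structure.

```python
def drop_class(message, class_name, boxes_key="BBoxes_xyxy"):
    """Return a copy of the decoded message without boxes for class_name.

    ASSUMPTION: boxes_key maps class names to coordinate lists. All other
    keys are copied through untouched so the message structure is preserved.
    """
    altered = dict(message)
    if boxes_key in altered:
        altered[boxes_key] = {
            name: coords
            for name, coords in altered[boxes_key].items()
            if name != class_name
        }
    return altered


def postprocess(buffer):
    """Decode a MessagePack buffer, alter it, and encode it again."""
    import msgpack  # third-party package: pip install msgpack

    message = msgpack.unpackb(buffer)
    return msgpack.packb(drop_class(message, "person"))
```

Keeping the alteration in a function that works on the decoded dictionary makes it easy to test without a running Nx AI Manager, and leaves every key it does not understand as it was.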

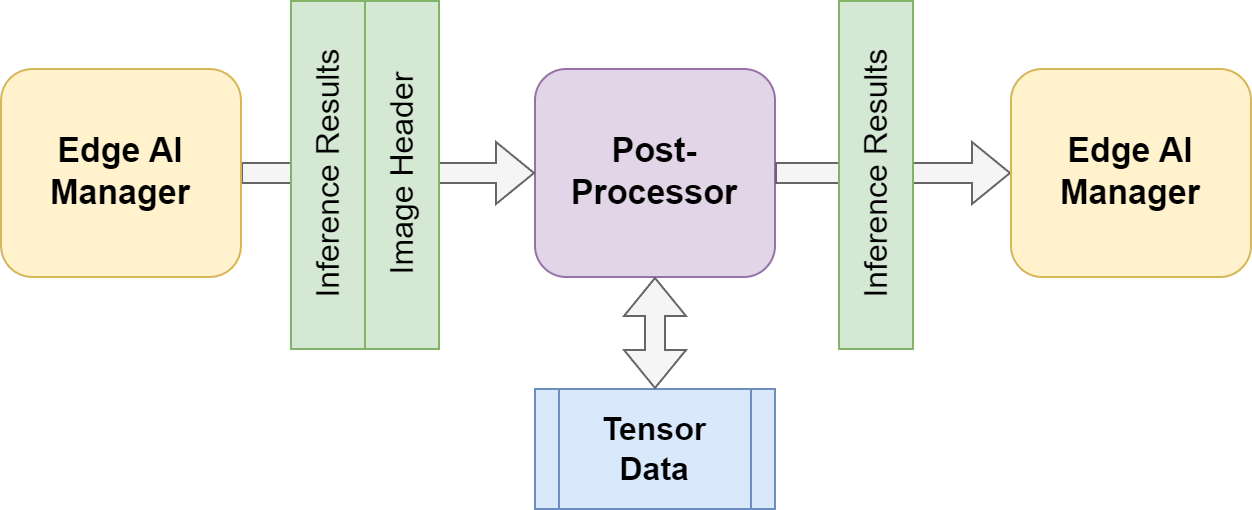

External Tensor Postprocessor

A setting is provided with which the user can indicate that a postprocessor should receive access to the input tensor from which the inference results were generated. This can be useful for many applications, such as inspecting the input tensor within the generated bounding boxes, or even creating sub-images.

When this setting is enabled, the Nx AI Manager will write the input tensor to shared memory, where all external postprocessors can access it. It will then send an additional message, the image header, to the external postprocessor containing the information needed to access this shared memory.

The image header message is sent after the inference results message. The external postprocessor must therefore expect to receive two separate messages before responding with its own message. The image header is also a MessagePack-encoded message.
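The message ordering described above can be sketched as follows. The function and parameter names are hypothetical: `recv_message` and `send_message` stand in for whatever socket framing the postprocessor uses, and `process` stands in for the user's own logic. The contents of the image header are not assumed here.

```python
def handle_tensor_request(recv_message, send_message, process):
    """Read two messages (results, then image header) before replying once.

    recv_message: callable returning the next raw message as bytes.
    send_message: callable taking the raw reply as bytes.
    process: callable (results_buffer, header_buffer) -> reply bytes.
    """
    results_buffer = recv_message()  # first message: inference results
    header_buffer = recv_message()   # second message: image header
    # Only after both messages have arrived is a single reply sent back.
    send_message(process(results_buffer, header_buffer))
```

Replying after the first message alone would leave the image header unread on the socket, so the next request would start out of step.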

The postprocessor can do additional analysis on the tensor data.

Custom external processing

If the behavior described above is not what you need, it is possible to create your own preprocessor to create a mask, or your own postprocessor to exclude results from an area that you designate.
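As one possible shape for such a postprocessor, the sketch below drops every bounding box whose centre falls inside a designated rectangle. It assumes boxes arrive as a flat list `[x1, y1, x2, y2, x1, y1, x2, y2, ...]` in the same coordinate space as the region; both the layout and the function name `exclude_region` are illustrative assumptions, not part of the Nx AI Manager API.

```python
def exclude_region(flat_boxes, region):
    """Drop boxes whose centre lies inside region = (x1, y1, x2, y2).

    ASSUMPTION: flat_boxes is a flat list of xyxy coordinates, four per box.
    """
    rx1, ry1, rx2, ry2 = region
    kept = []
    # Step through the list four values at a time, ignoring a trailing partial box.
    for i in range(0, len(flat_boxes) - 3, 4):
        x1, y1, x2, y2 = flat_boxes[i:i + 4]
        cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
        inside = rx1 <= cx <= rx2 and ry1 <= cy <= ry2
        if not inside:
            kept.extend((x1, y1, x2, y2))
    return kept
```

Testing the box centre rather than any overlap keeps objects that merely touch the edge of the excluded area; a stricter rule could test for intersection instead.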

For more information on custom preprocessors and postprocessors, you can check out the examples in our GitHub repository.
